How It Works

Models are presented with scanned pages containing handwritten editorial marks and are asked to identify each edit, its type, location, and the text before and after the edit.

The Task

Each page in the benchmark consists of printed text overlaid with

handwritten editorial annotations. Models are tasked with detecting and

interpreting all such editorial corrections and outputting them in

structured JSON format.

For each correction, the model must identify:

- Type of edit: One of

insertion,deletion,replacement,punctuation,capitalization, oritalicize - Original text: The text as it appeared before the edit

- Corrected text: The intended version after applying the edit

- Line number: The line on which the edit occurs. Use line 0 for titles or headings, and start counting full lines of body text from line 1

- Page: A known identifier for the image (e.g.,

"001.png"), provided alongside the input and not extracted by the model

Models should infer the intent behind handwritten annotations using both visual and textual cues. Common markup conventions include:

- Insertions: Indicated by caret marks (

^) or added words between lines - Deletions: Shown using strikethroughs or crossed-out text

- Replacements: Circled, underlined, or bracketed text with substitutions nearby

- Punctuation edits: Handwritten punctuation added, removed, or modified

- Capitalization: Case changes marked explicitly or via notation

- Italicize: Text that should be formatted in italics, typically indicated by underlining or special notation

This task combines fine-grained visual recognition with natural language understanding and domain knowledge of editorial conventions. The goal is not just OCR or layout detection, but true interpretation of handwritten edits in context.

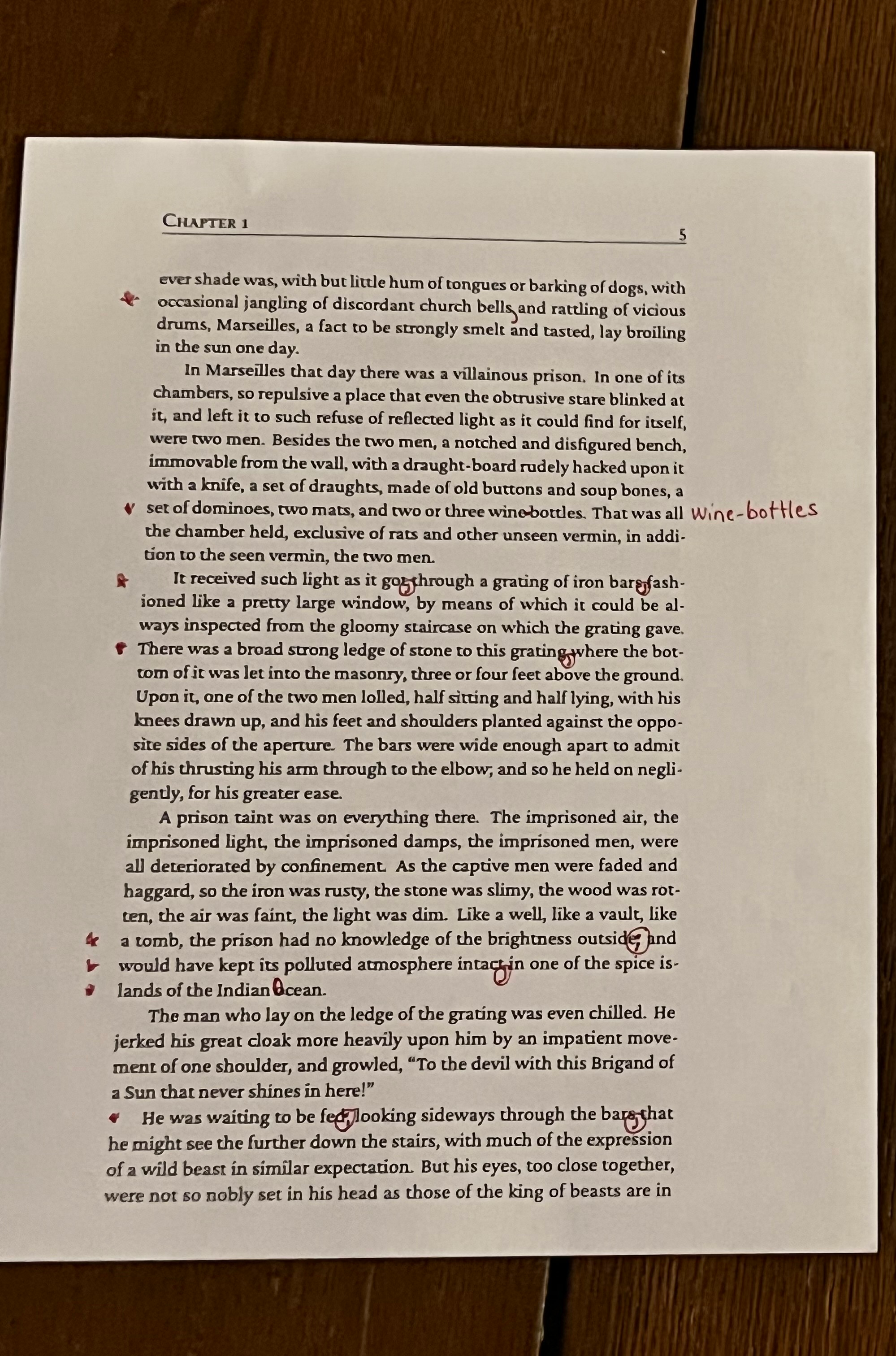

Example Input

Expected Output

For the example above, models should identify all ten editorial corrections, producing output like:

{

"image": "001.png",

"page_number": 5,

"source": "Little Dorrit",

"annotator": "pairsys",

"annotation_date": "2025-04-04",

"verified": true,

"edits": [

{

"type": "punctuation",

"original_text": "church bells",

"corrected_text": "church bells,",

"line_number": 2,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "wine bottles",

"corrected_text": "wine-bottles",

"line_number": 11,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "got through",

"corrected_text": "got, through",

"line_number": 14,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "iron bars fashioned",

"corrected_text": "iron bars, fashioned",

"line_number": 14,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "grating where",

"corrected_text": "grating, where",

"line_number": 17,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "outside and",

"corrected_text": "outside; and",

"line_number": 29,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "intact in",

"corrected_text": "intact, in",

"line_number": 30,

"page": "001.png"

},

{

"type": "capitalization",

"original_text": "indian ocean",

"corrected_text": "Indian Ocean",

"line_number": 31,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "was waiting to be fed looking",

"corrected_text": "was waiting to be fed; looking",

"line_number": 36,

"page": "001.png"

},

{

"type": "punctuation",

"original_text": "bars that",

"corrected_text": "bars, that",

"line_number": 36,

"page": "001.png"

}

]

}Last updated: 2025-04-04